
Facebook open sources ELF OpenGo #1311


Google, it's your turn now! We want AGZ weights ASAP... One benefit of pushing forward open bots that are superhuman is that it may force the hand of others as well. Now that FB has open-sourced their bot, which they claim is stronger than current LZ, maybe DeepMind will come back for thirds to get one last PR hooray for Google by open-sourcing their AGZ weights to spit in FB's face. Open competition is good for Go. This is truly the end of a human era.

Possible hidden meaning? And a day later: https://www.theguardian.com/technology/2016/jan/28/go-playing-facebook-spoil-googles-ai-deepmind I don't think "the" development is what people think it is referring to. https://www.reddit.com/r/cbaduk/comments/81ri8b/so_many_strong_networksais_on_cgos/dv4pzo5/ I kinda predicted this a while ago actually:

So..... overall, ELF is at least 400 Elo, and up to 800 Elo, stronger than the current best LZ network. But it appears to have some latent issues, such as being even more prone to ladder fallibility and certain other fragilities at less than super-high playouts, which ironically are not as prevalent in the current LZ net architecture. Using a hybrid mix of 50% LZ / 50% ELF self-play games, and continuing to train the LZ net while using ELF as a part-time strength-gaining game generator (at least until LZ catches up to ELF or even surpasses it), may patch the current weak spots in both net architectures. It would also set up a framework that is repeatable in the future: if AGZ weights get released, or the Facebook team releases a new and even stronger ELF OpenGo network, this can be repeated again. And it gives the LZ project the chance to figure out how to resolve some of the biggest issues of superhuman Go AI, that is, ladders, large group deaths, and things like high handicap and variable komi.

Since the current 15b still has enough capacity left, adding ELF games will allow LZ to catch up enough to give the devs a way to figure out how to lower or remove gating, etc. For sure, continuing "things as usual" is no longer an option for the LZ project, if for no other reason than that the strength gap is too great for volunteer clients to still be willing to go the business-as-usual path. And as gcp stated, it's not an option to train the ELF network directly either (not to mention that switching directly to the ELF network would in essence kill off Leela Zero itself). So it looks like the roadmap going forward is to train LZ hybridized with ELF, which means there is a way to make good on the FB post about ELF helping projects like Leela Zero after all!

Someone should run a test and see how strong that bot is compared to our own. They say they won 200 games, but we don't know out of how many.

They won all the games.

Quick, someone compile it and test how strong it is at a single playout. Then we'll have a better idea of its true strength.

"A go bot that has attained professional level in two weeks." They will hopefully release some more info.

These tests apparently gave FB's bot about twice as many playouts as LZ. So this is, what, roughly a 120 Elo advantage to Fb0? Anyway, he responded with some more info, and apparently they also used V100s for the games. I'm curious to know the win rate against LZ when both use a single playout.
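For reference, the usual logistic Elo model links an Elo gap to an expected score. The sketch below (standard library only) converts in both directions; the 120 Elo figure is the commenter's rough guess for doubled playouts, not a measured value. It also shows why a 200-0 sweep cannot by itself be turned into a finite Elo estimate: a score of exactly 1.0 has no finite inverse.

```python
import math

def expected_score(elo_diff: float) -> float:
    """Expected score of the stronger side under the logistic Elo model."""
    return 1.0 / (1.0 + 10.0 ** (-elo_diff / 400.0))

def elo_from_score(score: float) -> float:
    """Inverse: Elo difference implied by an observed score strictly in (0, 1)."""
    if not 0.0 < score < 1.0:
        # A perfect (or zero) score, e.g. 200-0, maps to an infinite Elo gap.
        raise ValueError("score must be strictly between 0 and 1")
    return 400.0 * math.log10(score / (1.0 - score))

# 120 Elo is the thread's guess for the value of doubled playouts.
print(f"{expected_score(120):.3f}")  # 0.666
```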

Their network is 20x224. So would 80,000 playouts be equivalent to about 145,000 on LZ's 15x192 network?
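The ~145,000 figure follows from a rough cost model, assumed here, in which the work per network evaluation scales with blocks × filters², since each residual block is dominated by 3x3 convolutions whose cost grows with the square of the channel count. A minimal sketch under that assumption (it ignores the input layer, the policy/value heads and any difference in implementation speed):

```python
def relative_cost(blocks: int, filters: int) -> int:
    # Rough proxy: each residual block holds filters->filters 3x3 convolutions,
    # so multiply-adds per board point scale with blocks * filters**2.
    return blocks * filters * filters

def equivalent_playouts(playouts: float,
                        big: tuple = (20, 224),
                        small: tuple = (15, 192)) -> float:
    """Playouts on the smaller net costing the same as `playouts` on the bigger one."""
    ratio = relative_cost(*big) / relative_cost(*small)
    return playouts * ratio

print(round(equivalent_playouts(80_000)))  # 145185
```

With these shapes the ratio is 49/27 ≈ 1.81, so 80,000 playouts on 20x224 cost about as much as 145,000 on 15x192.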

@diadorak Interesting. Yeah, in that case: he mentioned that in the match LZ was getting an average of 43,000 rollouts per move...

Feels like they rushed this release out the door (they didn't have the 200 SGFs, couldn't provide training data, and many simple questions couldn't currently be answered), perhaps seeing that LZ had already gotten superhuman and it was basically "now or never"... Fingers crossed that Google steps in by dumping all AGZ code, nets, data, etc. before FB gets a chance to refine their bot and trump them.

That's really impressive. We've had about 200 clients on average over 5 months. A lot of these are weaker machines, but let's low-ball it and say the equivalent of 100 GPUs over 20 weeks, so about 2,000 GPU-weeks as well. That means they didn't just throw compute at the problem but are even more efficient with their learning!

@odeint, they had 4,000 GPU-weeks (2,000 GPUs for two weeks) vs. 2,000 for LZ if your estimate is correct, so it's no surprise their network is much stronger: imagine LZ in 4 or 5 months at the current pace!
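The comparison is simple GPU-count × weeks arithmetic. A tiny sketch using the thread's own numbers (100 GPU-equivalents is odeint's deliberately low estimate for LZ's volunteers, and 5 months is closer to 21-22 weeks, so 20 is itself a rounding):

```python
def gpu_weeks(gpus: int, weeks: int) -> int:
    """Compute budget expressed as GPU count multiplied by wall-clock weeks."""
    return gpus * weeks

# odeint's low-balled estimate for LZ: ~100 GPU-equivalents for ~20 weeks
lz_budget = gpu_weeks(100, 20)
# FB's announcement: 2,000 GPUs over a two-week period
elf_budget = gpu_weeks(2000, 2)

print(lz_budget, elf_budget, elf_budget / lz_budget)  # 2000 4000 2.0
```

GPU-weeks ignore differences in GPU model (V100 vs. consumer cards), so this only bounds the comparison loosely.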

For the matches against LZ they used equal time for each bot, which is of course fair. But their software is much faster. An LZ implementation that used NVIDIA cuDNN might be competitive again. Same for training: they could go faster by using NVIDIA software.

Where are you guys pulling this info from? I can't even find a link to the model they trained. Did they really release it already? :D

Seems reasonable. There's nothing inherently wrong with the LZ approach; on the contrary, it's much more general and robust. They released it under a BSD-style license, though. That's a big one.

They tested equal time, but I think they may have misconfigured it: AZ was only doing enough nodes for the equivalent of 3 seconds on some moves, rather than using all of the time available.

Did they release the weight file? Can we convert it to LZ's weight format and give it a try?

Has anyone gotten it to compile? What is its strength at 1 playout? They tested 14 even games against pros, but Golaxy won 28 out of 30 games against pros while giving them one stone, and Golaxy was less than half a stone stronger than LZ. Yet they managed to win all 200 games against LZ? So what gives? Something is not adding up. It's amazing that they claimed not to have enough infrastructure yet to host the training data when asked for it. Seriously? They are Facebook. Plus, they said they would have to look for the 200 SGF games they played against LZ once a team member came back from vacation. Like, for reals?! I really hope to be proven wrong and that FB's OpenGo is very much stronger than anything we have publicly right now. Were these the bots named after US presidents on CGOS?

The four Korean players it played against, with their Go Ratings Elo: Kim Jiseok 3583, Shin Jinseo 3577, Park Yeonghun 3482 and Choi Cheolhan 3467. 14-0 against them, a simply amazing result.

Hi all, I am the first author of ELF OpenGo. Thanks for your discussions here! After seeing the comments posted here, we revisited our game logs and found that we were incorrect in our assumption that LeelaZero used a fixed 50s per move schedule. Under our settings LZ uses a variable-time schedule, which typically takes 50 seconds at the beginning of the game and then gradually decays to 17 seconds. So the conclusion is that:

The exact configuration we used to run LeelaZero is:

Commit: 97c2f81

Command line:

This is what we mean by “default setting without pondering” in our research blogs. The weights are Apr. 25, 192x15, 158603eb (http://zero.sjeng.org/networks/158603eb61a1e5e9dcd1aee157d813063292ae68fbc8fcd24502ae7daf4d7948.gz). This is the most recent public weight file we could find at the time we did the experiments. We would like your help in running a fair comparison, as we originally intended. What settings could we use to ensure LZ uses a fixed time of 50s per move? We're happy to rerun the experiments. Thanks for all the interest!

Someone speak up if I'm wrong, but if you're using gomill or a similar tool that can send a GTP command before the game starts, send LZ "time_settings 0 50 1". I believe this means no main time and a 50-second byo-yomi period in which to place 1 stone, so it should move within 50 seconds every move.
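In GTP version 2, `time_settings` takes three integers: main time, byo-yomi time and byo-yomi stones (Canadian byo-yomi, times in seconds; 0 stones means no byo-yomi). The helper below is a hypothetical sketch that builds the command and shows the per-move budget it implies. Whether the engine actually spends the whole 50 seconds still depends on its own time management.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TimeSettings:
    main_time: int        # seconds of main time
    byo_yomi_time: int    # seconds in each Canadian byo-yomi period
    byo_yomi_stones: int  # stones to play per byo-yomi period (0 = no byo-yomi)

    def to_gtp(self) -> str:
        """Render the GTP v2 `time_settings` command."""
        return f"time_settings {self.main_time} {self.byo_yomi_time} {self.byo_yomi_stones}"

    def per_move_budget(self) -> float:
        """Seconds available per move when there is no main time at all."""
        if self.main_time != 0 or self.byo_yomi_stones == 0:
            raise ValueError("a fixed per-move budget needs zero main time and byo-yomi enabled")
        return self.byo_yomi_time / self.byo_yomi_stones

fixed_50s = TimeSettings(main_time=0, byo_yomi_time=50, byo_yomi_stones=1)
print(fixed_50s.to_gtp())           # time_settings 0 50 1
print(fixed_50s.per_move_budget())  # 50.0
```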

@marcocalignano "keep it's own mistakes until finally learn it anyway the ELF way?" I do not think there is only one ELF way. ELF just had stronger hardware, so it is ahead, as AlphaGo is. At least I hope so; otherwise the LZ project could become quite boring. Finding your own way is interesting and exciting. The same applies to humans. When LZ was at low kyu level, would you also have proposed abandoning it?

But note that NN learning is more rigid than that (cf. training from human games). Using ELF games with ELF-based search means ELF evaluations are forced into the LZ network. And there ARE a few characteristic differences between programs, even Zero-style ones, and LZ undoubtedly gets closer to ELF. I'm not saying this is bad, but it only makes sense if LZ can significantly surpass ELF in the end. Otherwise it would be better to leave the choice to the users, who could simply use ELF as is.

If we train the LZ network only on games played by the ELF network, it will just be supervised learning, with no reinforcement part at all and no need for a game window. So 50-50 seems fair, I guess.

I don't fully trust ELF weights in LZ. It's much stronger, yes, but it can't really play handicap games well, and the value head is so sharp that I'm suspicious of that as well. :) I think GCP plans to cap ELF games at 50% of the training window, then scale them back or remove them once LZ is as strong as ELF. But the hope is that mixing in some ELF games along the way will help LZ learn a bit faster.

@tapsika "I'm not saying this is bad though, but only makes sense if LZ can significantly surpass ELF in the end. Otherwise it would be better to leave the choice to the users, who could simply use ELF as is." It would be wonderful if the ELF people joined our client base for a couple of weeks with their 2,000 powerful machines. I suspect LZ would skyrocket without using more ELF data.

@roy7 But can you really say that the ELF games are 50% of the total games, or is it 50% for the faster clients only?

Well, the window is 500K games as far as I recall, so once we've generated 250K ELF games we'll stop generating new ones. As for task allocation, I don't see how client speed matters. Some percentage (I don't recall exactly) of self-play tasks are given ELF weights, so that same percentage of the total games received from clients will be ELF games.
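The point about client speed can be checked with a quick simulation. This sketch assumes, hypothetically, that the server marks each self-play task as ELF with a fixed probability, independently of which client picks it up; the function name and client speeds are made up for illustration. Faster clients return more games, but each game has the same chance of being ELF, so the received share still tracks the assignment probability.

```python
import random
from typing import Sequence

def elf_share_received(elf_prob: float, games_per_hour: Sequence[int],
                       hours: int, seed: int = 0) -> float:
    """Fraction of returned games that used ELF weights (hypothetical server model)."""
    rng = random.Random(seed)
    elf_games = total_games = 0
    for _ in range(hours):
        for rate in games_per_hour:      # faster clients simply finish more tasks
            for _ in range(rate):
                total_games += 1
                if rng.random() < elf_prob:  # each task is flagged ELF independently
                    elf_games += 1
    return elf_games / total_games

# Clients of very different speeds; the result should land close to 0.25.
print(round(elf_share_received(0.25, [1, 2, 10, 40], hours=200), 3))
```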

I side with Roy in that I am suspicious of the ELF weights. It appears weaker at ladders and worse at handicap games. Also, we have not witnessed the ELF learning process, so it remains somewhat of a black box (though magic :) ), and there could be some unknown caveats that we want to avoid in LZ. The sharp value head also gives reason to be cautious, though of course it could just mean that it is better at understanding the board evaluation (only a small advantage would be evaluated strongly, assuming both players play strongly). So in short, ELF, though a lot stronger, appears to be a little less robust. It could of course be that in general there is a trade-off between robustness and strength for a given network size. In addition, if we go to higher percentages of ELF games, why not reboot from ELF altogether?

So, since the window shifts, the percentage could change depending on where the ELF games are concentrated.
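A small sliding-window simulation makes this concrete. Here a window of 10 games stands in for the 500K-game window, and each game is reduced to a hypothetical flag (True = ELF game). When the ELF games arrive in a burst, their share of the window rises while the burst is inside it and falls back as newer LZ games push them out.

```python
from collections import deque
from typing import Iterable, Iterator

def elf_fraction_over_time(stream: Iterable[bool], window: int) -> Iterator[float]:
    """Yield the ELF share of the training window after each new game."""
    buf: deque = deque(maxlen=window)
    elf_in_window = 0
    for is_elf in stream:
        if len(buf) == window and buf[0]:
            elf_in_window -= 1  # the oldest (ELF) game is about to be evicted
        buf.append(is_elf)      # deque(maxlen=...) drops buf[0] automatically when full
        elf_in_window += int(is_elf)
        yield elf_in_window / len(buf)

# 10 LZ games, a burst of 5 ELF games, then LZ games again.
games = [False] * 10 + [True] * 5 + [False] * 10
fractions = list(elf_fraction_over_time(games, window=10))
print(max(fractions), fractions[-1])  # 0.5 0.0
```

Capping the total number of ELF games at half the window size, as described for 250K out of 500K, bounds the peak share at 50%, but the share at any given moment still depends on how the ELF games are spread out.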

If we have something like 250K ELF games, you might be able to train a super-ELF. The idea of having both in the training window is to get some higher-quality games to accelerate Leela, while maybe avoiding some ELF pitfalls by still having Leela games. It's also important to have the Leela games so that if it's over-fitting or learning something exploitable, it can see its own mistakes and correct them in the next training pass, which the pure ELF data cannot do. Still, it's very much an ad hoc construction. But it seems OK so far? The rating graph is nicely steep.

I wouldn't allow more than 50% of the window, but it may be useful to generate more if someone wants to have a go at making a super-ELF. That would push the public state of the art.

"ELF is getting trashed!"

It seems LZ needs many more ladder games to trash ELF. I watched two wins, and those wins were ladder games.

LZ learned the ladders before ELF. Are you saying LZ is forgetting them now?

No, I meant that LZ does better in ladder games, and could maybe even be better at ladders.

Well, maybe after ten more new networks appear, ELF's win rate will be around 75% :))

348 : 56 (86.14%) now

Now: 95% confidence interval: 0.862 ± 0.0337 (0.828 to 0.896). Therefore the improvement in score is not statistically significant.
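The reported interval matches a normal-approximation (Wald) binomial interval on the 348 : 56 result, z = 1.96. A minimal sketch; the upper bound here comes out as 0.895 rather than 0.896 only because the thread rounded the centre to 0.862 before adding the half-width. A Wilson interval is a common alternative that behaves better when the score is near 0 or 1.

```python
import math

def wald_interval(wins: int, losses: int, z: float = 1.96):
    """Point estimate, half-width and bounds of a normal-approximation interval."""
    n = wins + losses
    p = wins / n
    half_width = z * math.sqrt(p * (1.0 - p) / n)
    return p, half_width, p - half_width, p + half_width

p, hw, lo, hi = wald_interval(348, 56)
print(f"{p:.4f} ± {hw:.4f} ({lo:.3f} to {hi:.3f})")  # 0.8614 ± 0.0337 (0.828 to 0.895)
```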

As FB ELF games are being used to train Leela Zero, maybe something like pair go games would be worthwhile. E.g., on every move, White has a p_w probability of playing a move generated by best_network and a (1-p_w) probability of playing a move generated by ELF. Likewise, on every move, Black has a p_b probability of playing a move generated by best_network and a (1-p_b) probability of playing a move generated by ELF.
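The per-move mixing rule can be sketched as follows. The names best_network, p_w and p_b come from the comment; the engine interface (a function from move history to a move) and the stub engines are hypothetical stand-ins, since a real setup would have to connect to the actual engines, for example over GTP.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical engine interface: given the move history, return the next move.
Engine = Callable[[List[str]], str]

def play_mixed_game(best_network: Engine, elf: Engine, p_w: float, p_b: float,
                    num_moves: int, seed: int = 0) -> List[Tuple[str, str]]:
    """Return (color, source) for each move; Black moves first."""
    rng = random.Random(seed)
    history: List[str] = []
    record: List[Tuple[str, str]] = []
    for i in range(num_moves):
        color = "B" if i % 2 == 0 else "W"
        p = p_b if color == "B" else p_w
        # With probability p the move comes from best_network, otherwise from ELF.
        if rng.random() < p:
            source, engine = "best_network", best_network
        else:
            source, engine = "ELF", elf
        history.append(engine(history))
        record.append((color, source))
    return record

def stub(name: str) -> Engine:
    """Placeholder engine that just labels moves; for illustration only."""
    return lambda history: f"{name}-{len(history)}"

# Extreme settings: White always uses best_network, Black always uses ELF.
game = play_mixed_game(stub("lz"), stub("elf"), p_w=1.0, p_b=0.0, num_moves=6)
print(game)
```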

Just some more info about ELF that I somehow missed over a month ago. It's not clear to me whether higher speed refers to lower playouts costing less time or to no-gating leading to faster progress. The AZ approach uses t=1 for the whole game, but @yuandong-tian's #1311 (comment) indicates that they only used t=1 for the first 30 moves (but maybe he's referring to the AGZ approach only?). (The bug pytorch/pytorch#5801 was that the gradient for KL divergence/cross entropy was incorrectly computed on GPUs, so if you do VAE/GAN work that uses KL divergence, take care!)
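Here t is the temperature applied to the root visit counts N, with move probabilities proportional to N^(1/t): t=1 samples in proportion to visits, and t→0 plays the most-visited move. A minimal sketch of the AGZ-style schedule discussed above (t=1 for the first 30 moves, then t→0); setting `temperature_moves` to the game length gives whole-game t=1. The function name and the visit counts in the usage lines are hypothetical.

```python
import random
from typing import Dict

def select_move(visit_counts: Dict[str, int], move_number: int,
                rng: random.Random, temperature_moves: int = 30) -> str:
    """Pick a self-play move from root visit counts.

    For the first `temperature_moves` moves use t=1 (sample in proportion to
    visit counts); afterwards use t->0 (play the most-visited move).
    """
    moves = list(visit_counts)
    if move_number < temperature_moves:
        weights = [visit_counts[m] for m in moves]
        return rng.choices(moves, weights=weights, k=1)[0]
    return max(moves, key=lambda m: visit_counts[m])

rng = random.Random(0)
counts = {"D4": 600, "Q16": 300, "C3": 100}  # hypothetical root visit counts
print(select_move(counts, move_number=5, rng=rng))   # sampled: D4 about 60% of the time
print(select_move(counts, move_number=45, rng=rng))  # always D4
```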

Just for information: LZ (d0187996) just won 3 consecutive games against ELF on KGS. I know this is not significant. But if we take into account that ELF uses a GTX 1080 Ti while I use a GTX 1060, it's at least remarkable :-) http://kgs.gosquares.net/index.rhtml.en?id=petgo3&id2=ELF&r=0

Can anyone explain to me why we use a 55% win rate for promotion?

@MartinVanEs See #1524 (comment) for discussions.
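The reasoning behind the threshold is in #1524; this sketch only shows what a 55% bar means numerically. Under the logistic Elo model 55% is about a 35 Elo edge, and in a hypothetical 400-game match it sits two binomial standard errors above 50%. That illustrates one common motivation for demanding a margin above 50% (not promoting a net whose apparent gain is just noise), but it is not a description of how LZ's match server actually decides.

```python
import math

def elo_from_score(score: float) -> float:
    """Elo difference implied by an expected score under the logistic model."""
    return 400.0 * math.log10(score / (1.0 - score))

def z_score_vs_even(score: float, games: int) -> float:
    """How many standard errors `score` lies above 50% in a match of `games` games."""
    std_err = math.sqrt(0.25 / games)  # binomial standard error at p = 0.5
    return (score - 0.5) / std_err

print(f"{elo_from_score(0.55):.1f}")        # 34.9
print(f"{z_score_vs_even(0.55, 400):.2f}")  # 2.00
```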

With the full-tuned net 4634d903 (not promoted, 52.09%), LZ catches up to ELF on KGS.

@petgo3 What is the meaning of "DF2 version 1.0" in "ELF [-]: GTP Engine for ELF (white): DF2 version 1.0"?

@yuandong-tian: regarding randomness in self-play games, are you using t=1 for the first 30 moves and then t=0, as in the AGZ paper? Or another scheme?

500K self-play games

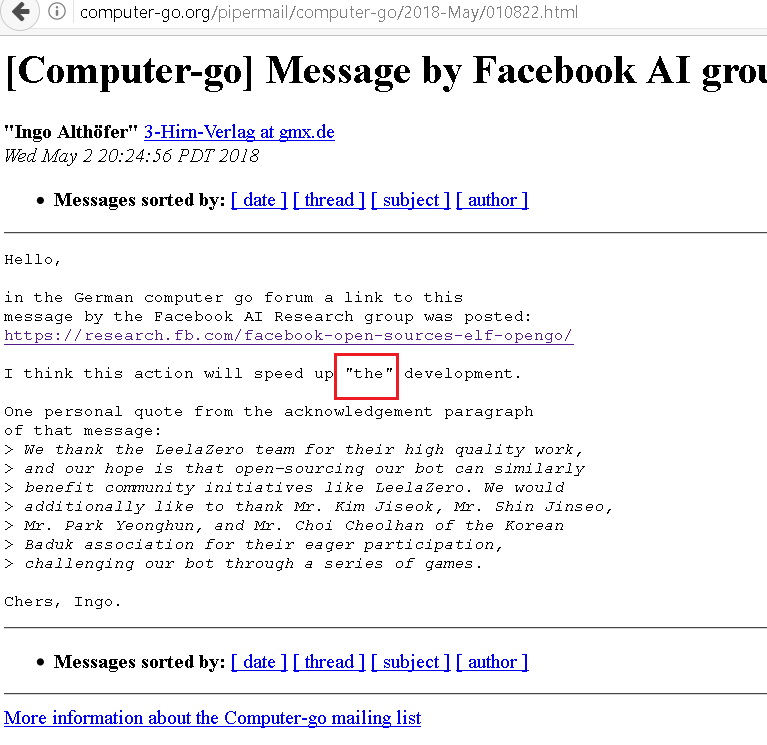

https://research.fb.com/facebook-open-sources-elf-opengo/

ELF OpenGo

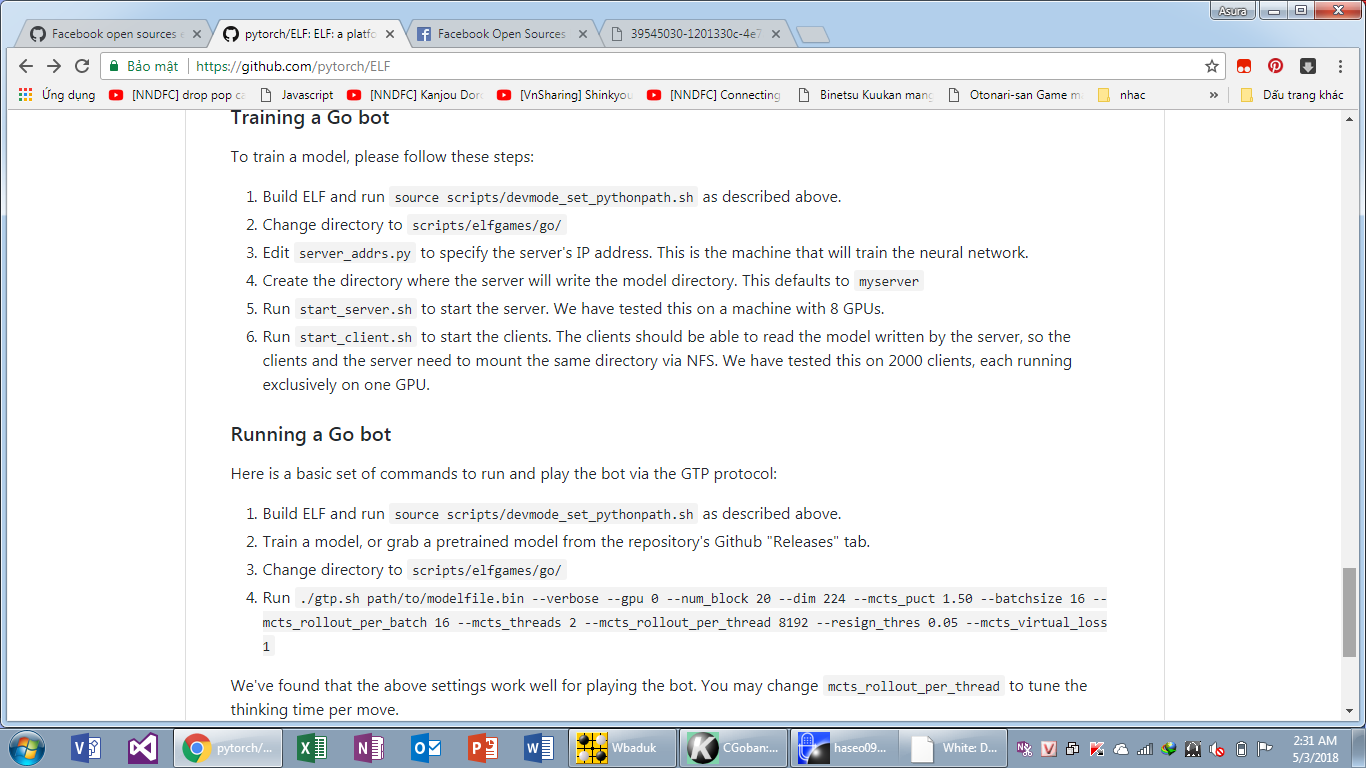

ELF OpenGo is a reimplementation of AlphaGo Zero / AlphaZero. It was trained on 2,000 GPUs over a two-week period, and has achieved high performance.

"ELF OpenGo has been successful playing against both other open source bots and human Go players. We played and won 200 games against LeelaZero (158603eb, Apr. 25, 2018), the strongest publicly available bot, using its default settings and no pondering. We also achieved a 14 win, 0 loss record against four of the top 30 world-ranked human Go players."

"We thank the LeelaZero team for their high quality work, and our hope is that open-sourcing our bot can similarly benefit community initiatives like LeelaZero"

Github: https://github.com/pytorch/elf
