Multi-Layered Docker Images #47411

Using a simple algorithm, convert the references to a path into a
sorted list of dependent paths based on how often they're referenced
and how deep in the tree they live. Equally-"popular" paths are then
sorted by name.

The existing writeReferencesToFile prints the paths in simple
ASCII sort order.

Sorting the paths by graph improves the chances that the difference
between two builds appears near the end of the list, instead of near
the beginning. This makes a difference for Nix builds which export a
closure for another program to consume, if that program implements its
own level of binary diffing.

For example, consider Docker images. If each store path is a separate layer,
then Docker images can be very efficiently transferred between systems,
and we get very good cache reuse between images built with the same
version of Nixpkgs. However, since Docker only reliably supports a
small number of layers (42), it is important to pick the individual
layers carefully. By storing very popular store paths in the first 40
layers, we improve the chances that the next Docker image will share
many of those layers.*

Given the dependency tree:

    A - B - C - D -\
     \   \   \      \
      \   \   \      \
       \   \ - E ---- F
        \- G

Nodes which have multiple references are duplicated:

    A - B - C - D - F
     \   \   \
      \   \   \- E - F
       \   \
        \   \- E - F
         \
          \- G

Each leaf node is now replaced by a counter defaulted to 1:

    A - B - C - D - (F:1)
     \   \   \
      \   \   \- E - (F:1)
       \   \
        \   \- E - (F:1)
         \
          \- (G:1)

Then each leaf counter is merged with its parent node, replacing the
parent node with a counter of 1, and each existing counter being
incremented by 1. That is to say `- D - (F:1)` becomes `- (D:1, F:2)`:

    A - B - C - (D:1, F:2)
     \   \   \
      \   \   \- (E:1, F:2)
       \   \
        \   \- (E:1, F:2)
         \
          \- (G:1)

Then each leaf counter is merged with its parent node again, merging
any counters, then incrementing each:

    A - B - (C:1, D:2, E:2, F:5)
     \   \
      \   \- (E:1, F:2)
       \
        \- (G:1)

And again:

    A - (B:1, C:2, D:3, E:4, F:8)
     \
      \- (G:1)

And again:

    (A:1, B:2, C:3, D:4, E:5, F:9, G:2)

and then paths have the following "popularity":

    A 1
    B 2
    C 3
    D 4
    E 5
    F 9
    G 2

and the popularity contest would result in the paths being printed as:

    F
    E
    D
    C
    B
    G
    A
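
For readers who want to check the numbers, here is a minimal Python sketch of the counter-merging steps described above. It is not the code from this PR: the names `GRAPH`, `popularity` and `by_popularity` are made up for illustration, and the graph is just the A..G example written as an adjacency map.

```python
from collections import Counter

# The example dependency tree: each key references the paths in its list.
GRAPH = {
    "A": ["B", "G"],
    "B": ["C", "E"],
    "C": ["D", "E"],
    "D": ["F"],
    "E": ["F"],
    "F": [],
    "G": [],
}


def popularity(graph, root):
    """Return a Counter mapping every path reachable from root to its score."""
    cache = {}

    def visit(node):
        if node not in cache:
            merged = Counter()
            for child in graph[node]:
                # Merge the counters of every child (shared paths add up,
                # which is the same as duplicating them in the tree).
                merged.update(visit(child))
            for name in merged:
                # Increment each existing counter by 1.
                merged[name] += 1
            # The node itself becomes a counter of 1.
            merged[node] = 1
            cache[node] = merged
        return cache[node]

    return visit(root)


def by_popularity(graph, root):
    """Most popular paths first; equally popular paths sorted by name."""
    scores = popularity(graph, root)
    return sorted(scores, key=lambda name: (-scores[name], name))


print(by_popularity(GRAPH, "A"))
```

The cache only avoids re-walking shared subtrees such as E; the scores are the same as if every shared node were duplicated as in the diagrams.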

* Note: People who have used a Dockerfile before assume Docker's
layers are inherently ordered. However, this is not true -- Docker
layers are content-addressable and are not explicitly layered until
they are composed into an image.

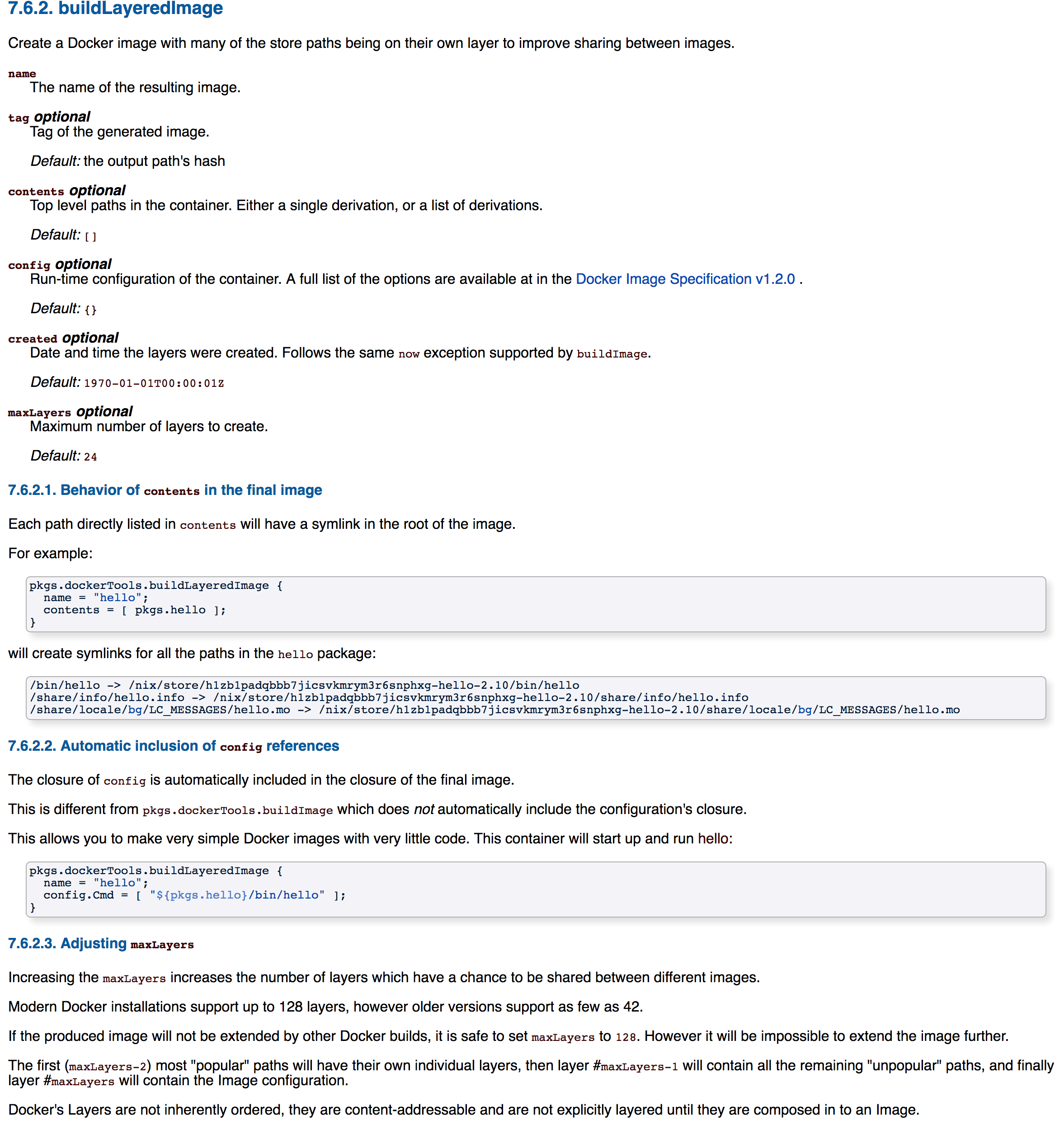

Create a many-layered Docker Image.

Implements much less than buildImage:

- Doesn't support specific uids/gids
- Doesn't support running commands after building
- Doesn't require qemu
- Doesn't create mutable copies of the files in the path
- Doesn't support parent images

If you want those features, I recommend using buildLayeredImage as an
input to buildImage.

Notably, it does support:

- Caching low level, common paths based on a graph traversal
  algorithm, see referencesByPopularity in
  0a80233487993256e811f566b1c80a40394c03d6

- Configurable number of layers. If you're not using AUFS or not
  extending the image, you can specify a larger number of layers at
  build time:

      pkgs.dockerTools.buildLayeredImage {
        name = "hello";
        maxLayers = 128;
        config.Cmd = [ "${pkgs.gitFull}/bin/git" ];
      };

- Parallelized creation of the layers, improving build speed.

- The contents of the image include the closure of the configuration,
  so you don't have to specify paths in contents and config.

  With buildImage, paths referred to by the config were not included
  automatically in the image. Thus, if you wanted to call Git, you
  had to specify it twice:

      pkgs.dockerTools.buildImage {
        name = "hello";
        contents = [ pkgs.gitFull ];
        config.Cmd = [ "${pkgs.gitFull}/bin/git" ];
      };

  buildLayeredImage on the other hand includes the runtime closure of
  the config when calculating the contents of the image:

      pkgs.dockerTools.buildLayeredImage {
        name = "hello";
        config.Cmd = [ "${pkgs.gitFull}/bin/git" ];
      };

Minor Problems

- If any of the store paths change, every layer will be rebuilt in
  the nix-build. However, because the layers are bit-for-bit
  reproducible, when these images are loaded into Docker they will
  match existing layers and not be imported or uploaded twice.
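
To illustrate why a rebuilt but bit-for-bit identical layer is not imported twice, here is a rough Python sketch. It is an assumption-laden illustration, not the layer-building code from this PR: `layer_digest` is a hypothetical helper that packs files into an uncompressed tar with fixed metadata and hashes the result, which is roughly how a content-addressed layer gets its identity.

```python
import hashlib
import io
import tarfile


def layer_digest(files):
    """Pack {name: bytes} into a tar with fixed metadata; return its sha256."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w", format=tarfile.USTAR_FORMAT) as tar:
        # Sorted names and constant mtime/owner/mode keep the archive
        # independent of build time and of the order files were listed in.
        for name in sorted(files):
            data = files[name]
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            info.mtime = 0
            info.uid = info.gid = 0
            info.uname = info.gname = ""
            info.mode = 0o444
            tar.addfile(info, io.BytesIO(data))
    return hashlib.sha256(buf.getvalue()).hexdigest()


first_build = layer_digest({"bin/hello": b"hello", "lib/libc.so": b"libc"})
rebuild = layer_digest({"lib/libc.so": b"libc", "bin/hello": b"hello"})
print(first_build == rebuild)  # same bytes, same digest, so nothing new to upload
```

If any file's contents change, the digest changes and only that layer needs to be transferred.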

Common Questions

- Aren't Docker layers ordered?

  No. People who have used a Dockerfile before assume Docker's
  layers are inherently ordered. However, this is not true -- Docker
  layers are content-addressable and are not explicitly layered until
  they are composed into an image.

- What happens if I have more than maxLayers of store paths?

The first (maxLayers-2) most "popular" paths will have their own

individual layers, then layer #(maxLayers-1) will contain all the

remaining "unpopular" paths, and finally layer #(maxLayers) will

contain the Image configuration.
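
The split described in that answer can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `assign_layers` is a hypothetical name, the input is assumed to already be sorted most-popular-first, and the final slot for the image configuration is simply left unused by the returned groups.

```python
def assign_layers(paths_by_popularity, max_layers):
    """Group store paths into at most max_layers - 1 layers.

    The first max_layers - 2 paths each get a layer of their own, every
    remaining path shares one extra layer, and the last of the max_layers
    slots stays free for the image configuration.
    """
    if max_layers < 3:
        raise ValueError("need room for one path, the rest, and the config")
    dedicated = max_layers - 2
    layers = [[path] for path in paths_by_popularity[:dedicated]]
    rest = paths_by_popularity[dedicated:]
    if rest:
        layers.append(rest)
    return layers


# With the A..G example and a (tiny) maxLayers of 5:
print(assign_layers(["F", "E", "D", "C", "B", "G", "A"], 5))
# → [['F'], ['E'], ['D'], ['C', 'B', 'G', 'A']]
```

When the closure has no more than maxLayers-2 paths, every path gets its own layer and the shared layer is omitted.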

I'm not sure I understand exactly how your "automatic closure inclusion" differs from Using Also adding it to contents just creates the moral "buildEnv" in /

Great catch, @srhb -- fixed up my docs. Not sure where I got that idea.

Looks really promising… gotta find some time to try it out though 👼

@grahamc I wonder if at some point we could/should build OCI images (that said… docker cannot import oci image as of today 😓)

@grahamc It would be nice to add a basic test (build and run a layered image) in

@vdemeester Docker cannot, but Skopeo can convert an OCI image to a Docker image! I already tried a bit to use Buildah to build an image instead of our shell scripts, but still wip...

Nice work @grahamc! It seems to me that small packages might be a problem if there are too many, but it may not be a problem in practice. If it is, there may be another heuristic to cluster the small ones in a clever way. It looks very compelling!

Test added!

Unfortunately, trying to combine any paths makes it much less likely that different images would share anything. Small packages don't seem to be a problem or cause issues. I've tested this with images containing python, fortran, java, haskell, php, bash... sometimes all at once :)

I did run into this issue building images based on nodejs with ~800 packages in one image.

What was the issue exactly? It should handle that case appropriately.

On Sep 27, 2018, at 10:50 PM, adisbladis wrote:

> It seems to me that small packages might be a problem if there are too many, but it may not be a problem in practice. If it is, there may be another heuristic to cluster the small ones in a clever way.
>
> Well, that's just me speculating.
>
> I did run into this issue building images based on nodejs with ~800 packages in one image.
>
> I'm thinking that this PR is a good general approach to the problem but we may need to take a different approach in some pathological cases.

I tried building images with large numbers of dependencies like quassel-webserver and azure-cli. Not 800, but a large number nonetheless. I found that base layers were well selected: glibc, nodejs, ncurses, etc. I think if you want greater control over what layers are created, buildImage is for you.

Thanks!

Great! I was not aware that there was a practical limit on the number of layers in an image. I wrote https://github.com/ContainerSolutions/nixpkgs-overlay/blob/master/docker-tools.nix last April. It differs by building the layers as separate derivations that can be cached by Nix. That might speed up building images quite a bit actually, by doing the minimal amount of work possible. It also offers two types of outputs: a directory of image layers, and a final tar file that is accepted by

Indeed, a hard limit at 125: https://github.com/moby/moby/blob/b3e9f7b13b0f0c414fa6253e1f17a86b2cff68b5/layer/layer_store.go#L23-L26

It looks like your code there uses IFD. Is that right?

Hm. I only tried small programs so far ;) Too bad that docker has this limitation... My code also only relies on

Ah, yeah, unfortunately that does do a build and then imports it, so we can't use it in Nixpkgs.

@graham-at-target Cool work! I just read your blog post on this, and I was wondering: would it be possible to merge together some of the very common layers if they are often used together?

@Nadrieril I had the same thought, that it's probably better to explicitly cut the image into layers at certain known points like nodejs, python3, glibc etc.

It's by no means done since I didn't have much time yet, here is the current progress: https://gist.github.com/adisbladis/777c7d8240be35faa107bc8d6c869a9f. I plan to add some minor features and port all the python code to nix before I consider it done.

I did experiment with a similar idea: a layer per enumerated input. However, I found it was less efficient for many of my images and made them much more brittle, breaking the abstractions set up by nixpkgs.

I also, yes, did attempt to combine some paths into one layer, but it caused the cache hits to be less frequent between images.

In general I found that with maxLayers set to 120 almost every image fit nicely without many paths in the final layer. (Note: this PR merged before I could update it, but all overlay drivers Docker uses support up to 125 now, so I will be updating the default.)

On Oct 2, 2018, at 4:24 AM, adisbladis wrote:

> @Nadrieril I had the same thought, that it's probably better to explicitly cut the image into layers at certain known points like nodejs, python3, glibc etc.
>
> It's by no means done since I didn't have much time yet, here is the current progress: https://gist.github.com/adisbladis/777c7d8240be35faa107bc8d6c869a9f


@grahamc this is delightful, thanks!

This PR was written while working for Target.

cc @adelbertc @shlevy @stew